During the 2016 general elections, a bodyguard attached to the Go Forward Campaign of ex-Prime Minister Amama Mbabazi disappeared.

Later, images of a corpse circulated online, and the family identified the body as that of Mr. Christopher Aine. His twin sister told newspaper reporters that a scar and a gap between his teeth convinced her that the deceased was in fact Aine.

The affair weighed dangerously on the candidates' campaigns, which were tense and, as with many elections, defined by violence and claims of violence. Then there was the explosive affair over the death of People Power supporter Ziggie Wine, with claims that he had been tortured set against claims that he died in a motor accident.

In each of these cases, and in countless others where the public forms its opinion of events from images, audio and other published material, the line between the truth and its manipulation has long been thin. The problem is about to get considerably worse.

Recent advances in artificial intelligence can create fictional situations that cannot be distinguished from reality without investigation. Governments, political organisations, criminal outfits and individuals have long sought to manipulate public opinion, as when the data mining firm Cambridge Analytica used Facebook data for messaging during the 2017 Kenyan election. AI has now opened the gates to a dangerous new period for democracy and human rights.

AI-driven algorithms can alter what people see in their feeds and thus shape the narrative without regard to facts. This is a game changer.

Deep fakes created with AI can bend reality. They can depict people saying or doing things they never said or did, or conjure up events that never occurred. In an election, a voter can therefore be misled about what candidates are saying, their positions on issues, and even whether certain events actually happened.

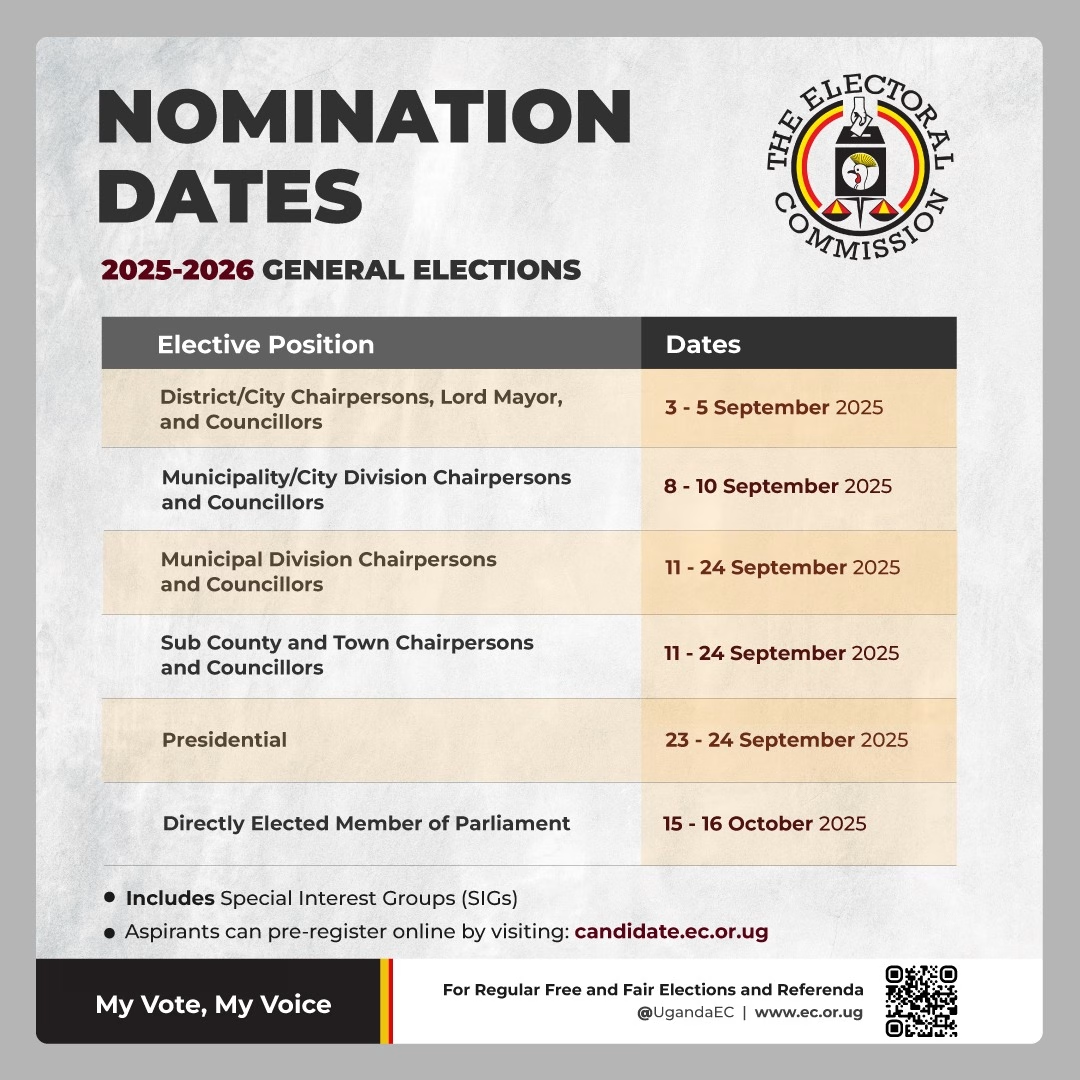

2025 will be the first pre-election year in which AI-generated content exerts widespread influence, and that influence will carry through the elections and beyond. If left unchecked, fraudulent and deceptive uses of AI could infringe on Ugandan voters' fundamental right to make informed decisions. AI could be used to manipulate the administration of elections, spread disinformation to suppress voter turnout or distort the results.

Very few Ugandans have the training or inclination to verify every source of information, let alone crucial information about candidates and processes such as voting results.

Disenfranchising voters by sowing doubt about candidates and electoral processes through all manner of dirty tricks has long been practised globally. This kind of underhand and nefarious trickery will now be amplified by machine learning and AI and drip-fed to millions of smartphones across the country.

Recently, in a hotly contested and violent election in Pakistan, a candidate used AI to address his supporters from his jail cell. It is a brave new world.

Moreover, since the last four general elections have ended up in court, AI-driven distortion will test faith in the electoral process. That faith is what is at stake, along with the entire civic mission of elections. AI technologies could easily be deployed to manufacture fake images and false evidence of misconduct such as ballot stuffing.

Manufactured misconduct can further corrode public trust in election results, invite threats of violence against Electoral Commission personnel at polling stations, and add fuel to the fire of the electoral violence already so common in previous elections.

In the aftermath, AI could be used to fabricate audio of a candidate claiming the election was rigged against them, or to generate other misinformation that could persuade the supporters of a failed campaign to disrupt vote counting and certification procedures. These processes are already politicized and have often served as the basis for efforts to sabotage many an election, as multiple court cases show.

There is an urgent need for legislation that mandates disclaimers informing voters when AI is being used, forbids political deep fakes and punishes offenders with deterrent sentences. There is currently a global gap in the regulation of AI, but we can learn from countries already confronting the issue, such as Singapore.

The Constitution guarantees every Ugandan inherent human rights, yet these are most threatened during the election period. The right to vote and the freedoms of assembly, expression and privacy, among others, have evolved with technological advances.

Political gatherings need not be physical; free speech now happens mostly online, in closed communities or safe spaces; and privacy is no longer the default, as Ugandans have been conditioned to share everything and be surprised by nothing in the digital universe.

If the government, Parliament and civil society do not act now, while the writing is on the wall, the threats to the Constitution and civil order will be upon us.